Deploy Dify on Zeabur: The Ultimate LLM App Development Platform

How to build secure, production-grade AI Agents without the backend headache.

Introduction

The release of GPT-4 and the subsequent explosion of Large Language Models (LLMs) set off a gold rush around AI agents. Developers, product managers, and CTOs quickly recognized the potential: automated support and intelligent search, all powered by intelligent agents.

But as the initial excitement settled, the "implementation wall" appeared. You have the model, but how do you build a secure, reliable application around it? How do you make it know your business? How do you stop it from lying?

One of our answers is Dify.ai.

What is Dify?

At its core, Dify (the name comes from Define + Modify, and also nods to "Do It For You") is an open-source platform tailored for LLM application development. It solves the fragmentation problem in modern AI development by integrating two critical concepts:

- Backend as a Service (BaaS): It handles the heavy lifting, so you don’t have to worry about database management, API generation, or server logic. Frontend developers can just "plug and play."

- LLMOps (LLM Operations): It provides a full suite of tools to monitor, manage, and refine the performance of your AI models over time.

Dify is a unified platform that lets all kinds of developers build production-grade generative AI applications rapidly.

Access leading AI models with transparent pricing on Zeabur AI Hub.

Under the Hood: The Technology Stack

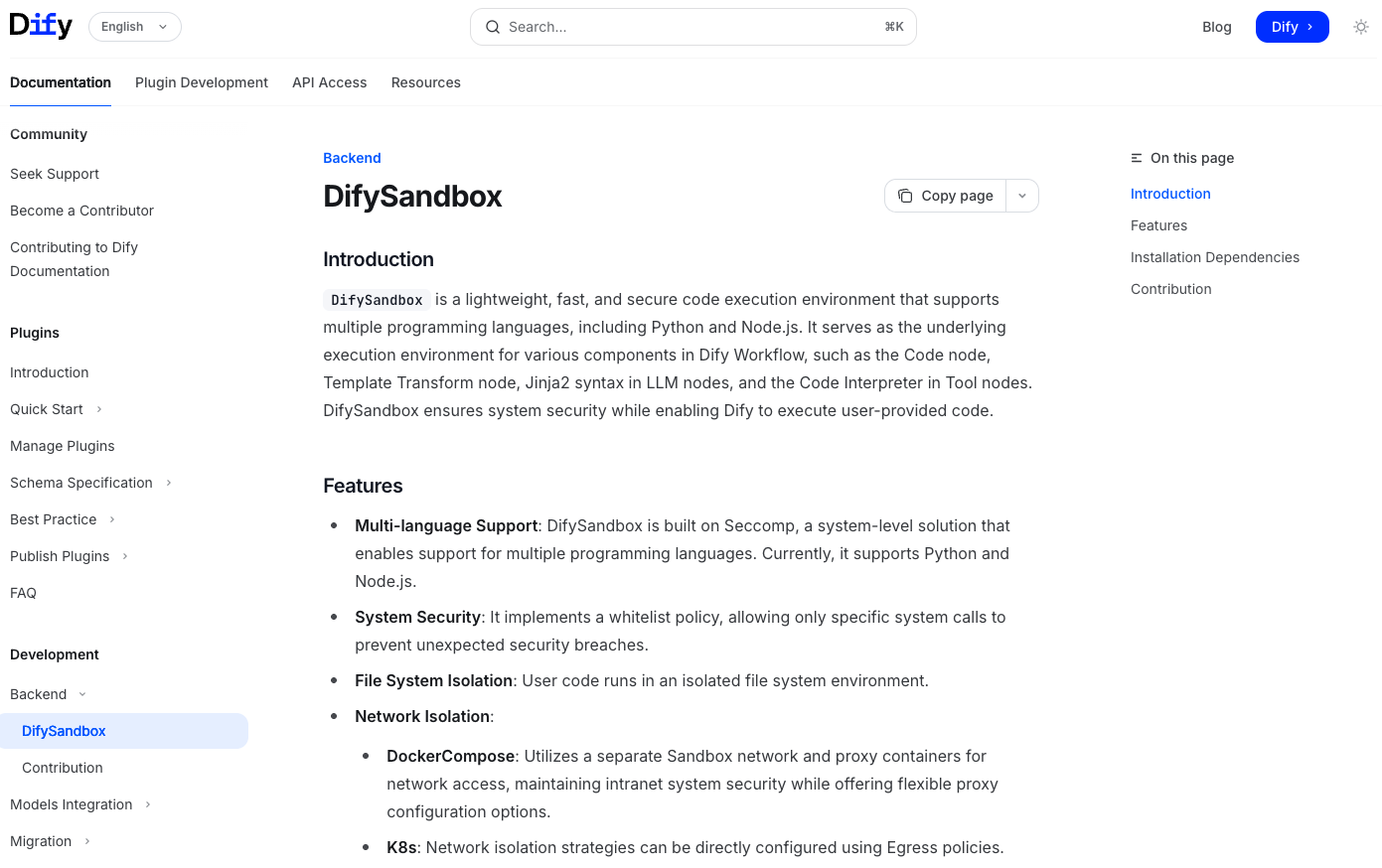

Dify isn't just a wrapper; it’s a comprehensive toolkit designed to save you from reinventing the wheel.

- Model Agnostic: Dify ensures you aren't vendor-locked. It supports hundreds of models (OpenAI, Anthropic Claude, Llama via Ollama, etc.). You can switch models with a click to balance cost vs. performance.

- Prompt Orchestration: A visual "playground" allows you to tweak prompts and see results instantly. This is where "Prompt Engineering" becomes accessible to the whole team.

- Flexible Agent Framework: Beyond simple chat, Dify allows you to build Agents that do things—breaking down complex goals into steps and executing tasks automatically.

- Instant API Generation: The moment you design an app in the UI, Dify generates a standard API for it. Your frontend team can start integrating immediately.
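To make the "standard API" idea concrete, here is a minimal sketch of what a request to a Dify chat app might look like. It assumes the `chat-messages` endpoint with a Bearer app key; the host name, the key, and the `build_chat_request` helper are placeholders invented for this example, so check the API page of your own app for the exact contract. The request is built but deliberately not sent.

```python
import json
import urllib.request

# Assumed values: replace with your own deployment URL and app API key.
DIFY_BASE_URL = "https://your-dify.example.com/v1"  # hypothetical host
DIFY_API_KEY = "app-xxxxxxxx"  # placeholder, not a real key


def build_chat_request(query: str, user_id: str) -> urllib.request.Request:
    """Build (but do not send) a POST request to the chat-messages endpoint."""
    payload = {
        "inputs": {},                 # app-defined variables, empty here
        "query": query,               # the end user's message
        "response_mode": "blocking",  # wait for the full answer
        "user": user_id,              # stable identifier for the end user
    }
    return urllib.request.Request(
        url=f"{DIFY_BASE_URL}/chat-messages",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {DIFY_API_KEY}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_chat_request("What is your refund policy?", "demo-user-1")
# To actually send it: urllib.request.urlopen(request)
```

Because the contract is plain HTTP plus JSON, the same call can be made from any frontend stack or HTTP client.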

4 Reasons You Should Use Dify: Solving Problems with the Dify Arsenal

If you have ever tried to build a custom AI app from scratch using raw APIs or complex libraries, you have likely hit some specific roadblocks. Dify is designed to remove the most common ones.

1. "How do I 'train' a model on my own data?"

The Problem: Public models (like standard ChatGPT) don't know your company's internal wikis, PDFs, or customer support logs.

The Dify Solution: You don't actually need to "train" a model, which is expensive and slow. Dify provides a high-quality RAG (Retrieval-Augmented Generation) engine. You simply upload the relevant data, and Dify handles the rest: segmentation, indexing, and embedding. It turns your static files into a searchable brain for the AI.
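The segmentation, embedding, and retrieval steps can be illustrated with a toy sketch. This is not Dify's implementation: the "embedding" is a simple bag-of-words count rather than a real embedding model, and every function name is invented for the example. It only shows the shape of the pipeline.

```python
import math
import re
from collections import Counter


def split_into_chunks(text: str, max_words: int = 12) -> list[str]:
    """Segmentation: cut the text into fixed-size word windows."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text: str) -> Counter:
    """Toy 'embedding': word counts. Real systems use an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, index: list[tuple[str, Counter]], top_k: int = 1) -> list[str]:
    """Return the top_k chunks most similar to the question."""
    q = embed(question)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:top_k]]


document = (
    "Refunds are issued within 14 days of purchase. "
    "Support is available by email on weekdays. "
    "Enterprise plans include a dedicated account manager."
)
chunks = split_into_chunks(document, max_words=8)    # segmentation
index = [(chunk, embed(chunk)) for chunk in chunks]  # embedding + indexing
best = retrieve("How many days do refunds take?", index)
print(best[0])
```

A production RAG engine replaces the word counts with dense vectors and the sorted list with a vector database, but the flow of chunk, embed, index, and retrieve stays the same.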

2. "How do I set up workflows?"

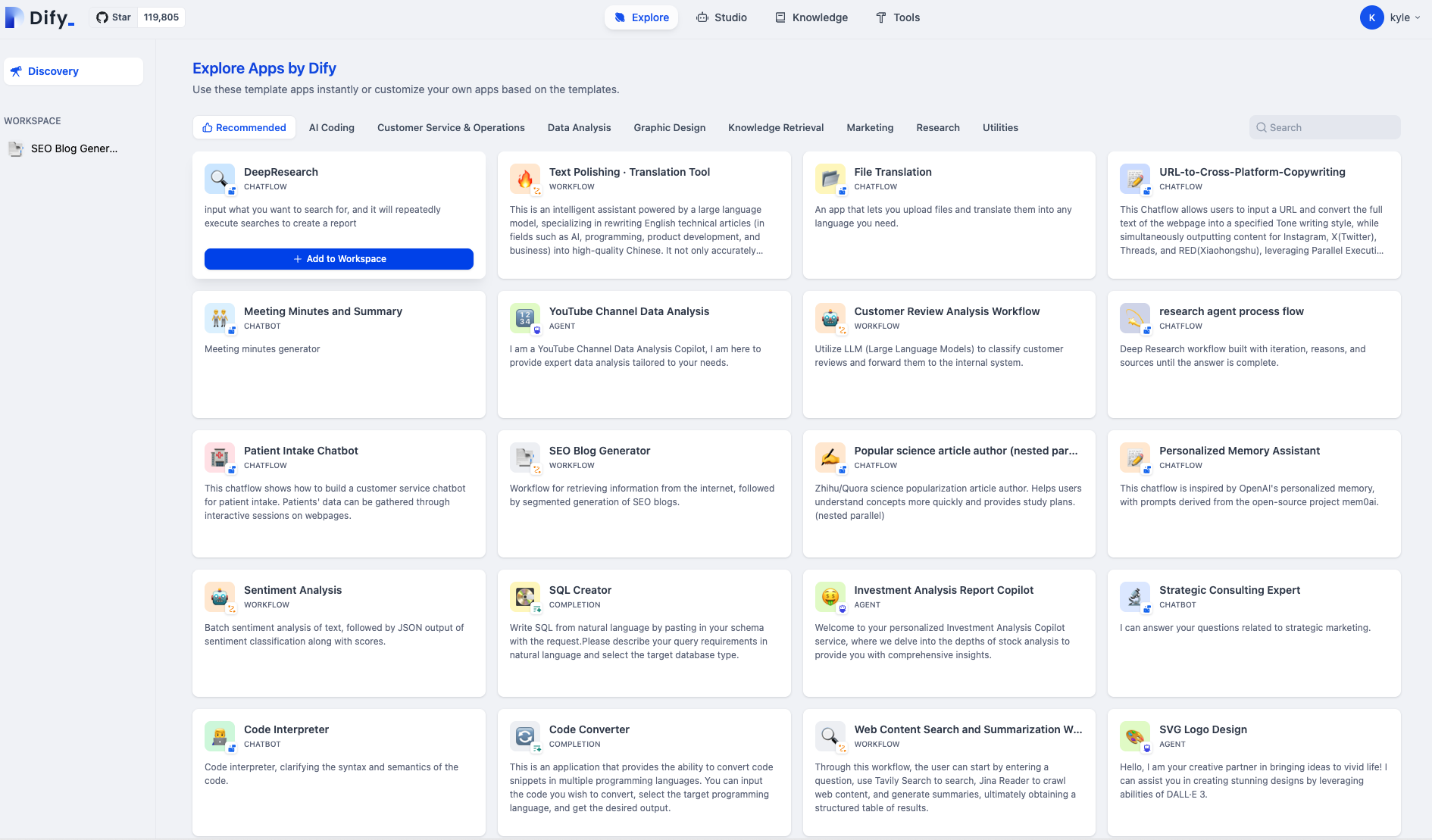

The Problem: If you are new to workflow builders such as n8n, designing a workflow from a blank canvas can be confusing.

The Dify Solution: The Explore page in Dify offers ready-made workflows that have already been built and tested by other Dify users. As with a Zeabur template, one click and you are good to go.

3. "How do I prevent the AI from lying (Hallucinations)?"

The Problem: LLMs can be confident liars. If they don't know an answer, they often make one up.

The Dify Solution: Using the RAG capabilities mentioned above, Dify grounds the model in your own data. You can configure the system to answer only from the context retrieved from your knowledge base, which significantly reduces misinformation and helps the AI act as an expert on your specific domain.
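The grounding idea boils down to how the final prompt is assembled. The sketch below is an illustration of the general technique, not Dify's internal prompt template; `build_grounded_prompt` and the exact wording of the instruction are assumptions made for this example.

```python
def build_grounded_prompt(question: str, context_chunks: list[str]) -> str:
    """Assemble a prompt that restricts the model to the retrieved context."""
    if context_chunks:
        # Number each chunk so the answer can be traced back to a source.
        context = "\n".join(f"[{i}] {c}" for i, c in enumerate(context_chunks, start=1))
    else:
        context = "(no relevant context found)"
    return (
        "Answer using ONLY the context below. "
        "If the context does not contain the answer, reply: \"I don't know.\"\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


prompt = build_grounded_prompt(
    "How many days do refunds take?",
    ["Refunds are issued within 14 days of purchase."],
)
print(prompt)
```

Giving the model an explicit way out ("I don't know") matters as much as the context itself: without it, the model is still nudged to produce some answer.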

4. "I’m a Frontend Dev... do I really have to build a Python backend?"

The Problem: To build a secure GenAI app, you usually need a Python backend (using LangChain or LlamaIndex) to manage API keys, context, and vector databases. For frontend developers or product managers, this infrastructure overhead is a massive barrier to entry.

The Dify Solution: Dify acts as a BaaS (Backend-as-a-Service). The moment you configure your agent in the Dify UI, it automatically generates a secure, production-ready API for that specific agent. Your frontend team can simply hit this API to send messages and receive answers, completely bypassing the need to build and maintain a custom backend server.
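On the client side, "managing context" can shrink to passing one identifier back and forth. The sketch below assumes the blocking chat response carries `answer` and `conversation_id` fields and that a follow-up request may include `conversation_id` to continue the same thread; the sample response is hand-written for illustration, and `build_payload` is a hypothetical helper.

```python
import json
from typing import Optional


def build_payload(query: str, user_id: str, conversation_id: Optional[str] = None) -> dict:
    """Build a chat request body; include conversation_id to continue a thread."""
    payload = {"inputs": {}, "query": query, "response_mode": "blocking", "user": user_id}
    if conversation_id:
        payload["conversation_id"] = conversation_id
    return payload


# Illustrative response body, not captured from a real server.
sample_response = json.loads(
    '{"answer": "Refunds are issued within 14 days.", "conversation_id": "conv-123"}'
)

first = build_payload("What is your refund policy?", "demo-user-1")
follow_up = build_payload(
    "Does that include digital goods?",
    "demo-user-1",
    conversation_id=sample_response["conversation_id"],
)
print(follow_up)
```

The conversation history itself stays on the server, so the frontend only has to remember the identifier between turns.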

The Strategic Advantage: Privacy and Control

While tools like OpenAI’s "Assistants API" or custom "GPTs" are powerful, they often require sending your data into a "black box" ecosystem.

Dify offers a distinct alternative. Because it is open-source, you can self-host it. This gives you:

- Full Data Sovereignty: Your private data stays on your servers.

- No Vendor Lock-in: You own the orchestration layer.

- Domain Expertise: By refining the knowledge base and prompts locally, you build an asset that is unique to your business instead of relying on the generic behavior of public models.

Conclusion

The goal of Dify is simple: Let developers focus on innovation, not plumbing.

By standardizing the backend and operations of AI, Dify allows you to move from "Hello World" to a fully functional, domain-specific AI application in a fraction of the time. Whether you are a solo developer or an enterprise looking to deploy secure internal tools, Dify provides the architecture to make your AI useful, accurate, and reliable.

Ready to build? Deploy the Dify template on Zeabur, or sign up for Zeabur today to start building your first agent!