2025/8/26 Incident Report: Abnormal Behavior During zbpack Redeployments

Because the existing zbpack v1 infrastructure is being deprecated, Zeabur has been rolling out the next-generation build system (zbpack v2) to all users. We are aware that this migration introduced compatibility issues. This post explains what caused them, how we addressed them, and how you can work around any that you still encounter.

Why roll out the next-generation build system to everyone?

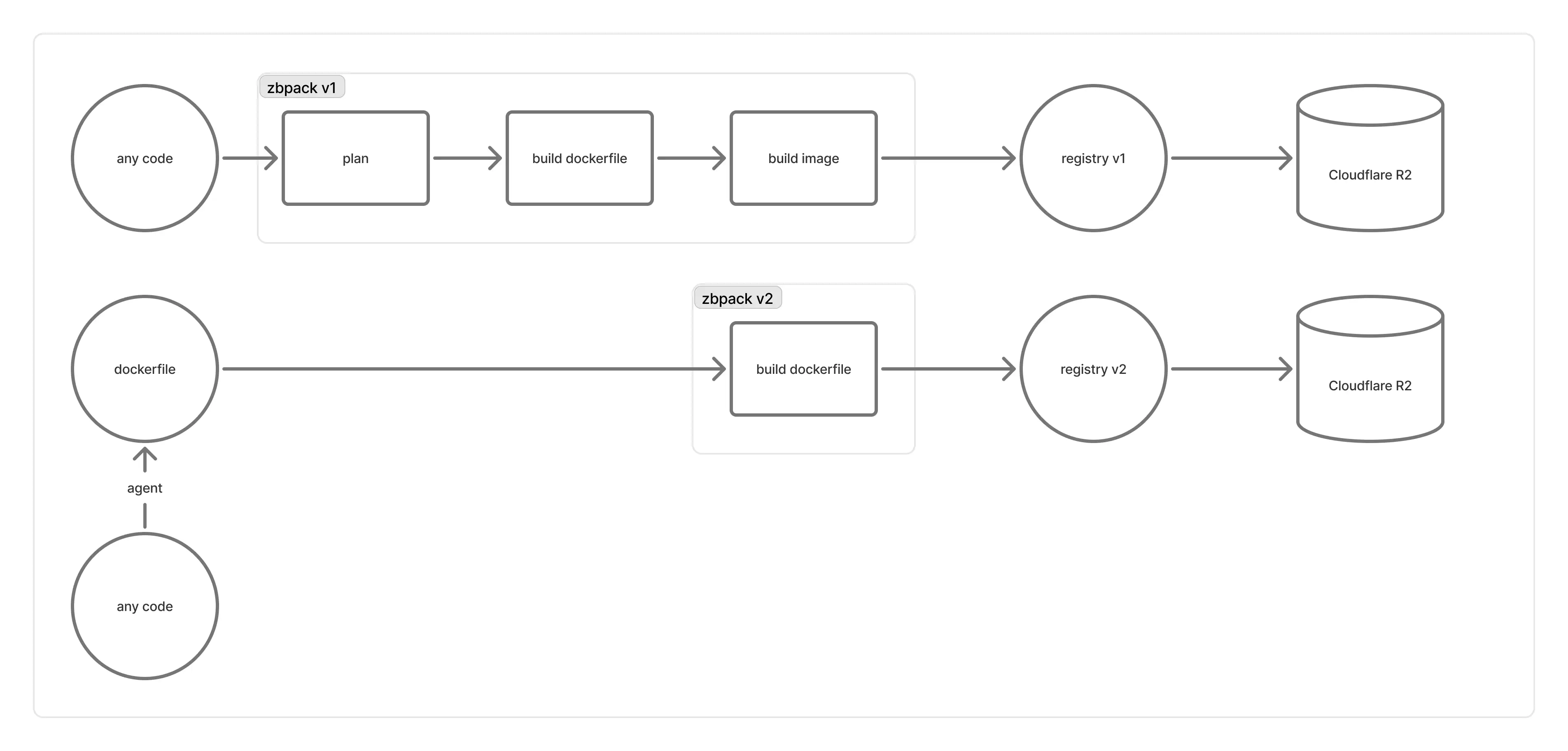

Here is how the build infrastructure behind Zeabur works:

Registry v1 was built using the distribution/distribution registry. A number of issues with it prompted us to migrate to a self-designed registry v2:

- Early on, each build machine ran its own registry. These machines were short‑lived, and there was no good way to restrict build containers to their own machine’s registry. Occasionally the registry exited before the build container did, causing the build to fail, and retries rarely helped.

- Later, we moved registries to longer‑lived machines and had build containers connect to a load‑balanced registry. However, when writing image manifests, the registry handling blob uploads might not have finished uploading yet, or Cloudflare R2 lagged in updating, leading the registry to return `blob unknown` and reject the manifest. As a result, images couldn’t be pulled at startup.

- Additionally, `distribution/distribution` favors global blob deduplication, which prevents us from knowing which blobs are unused without reading all manifests. The official garbage collection tool requires stop‑the‑world downtime. At Zeabur’s registry scale this was unworkable, causing a huge accumulation of blobs in R2 and severe performance issues.

- Many users also reported extremely slow upload speeds via registry v1.
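To illustrate why global deduplication makes garbage collection expensive, here is a minimal sketch. It is not the `distribution/distribution` implementation; the in-memory data model and all names are hypothetical. With one shared blob pool, deciding whether a blob is unused means walking every manifest in every repository:

```python
# Hypothetical in-memory model: repository name -> list of manifests,
# where each manifest is the set of blob digests it references.
Manifests = dict[str, list[set[str]]]


def unused_blobs_global(all_blobs: set[str], repos: Manifests) -> set[str]:
    """Mark-and-sweep over a globally deduplicated blob pool.

    Every manifest of every repository must be read to build the set of
    referenced blobs before anything can be deleted safely.
    """
    referenced: set[str] = set()
    for manifests in repos.values():
        for blobs in manifests:
            referenced |= blobs
    return all_blobs - referenced


repos = {
    "team-a/api": [{"sha256:aaa", "sha256:bbb"}],
    "team-b/web": [{"sha256:bbb", "sha256:ccc"}],
}
pool = {"sha256:aaa", "sha256:bbb", "sha256:ccc", "sha256:ddd"}

print(unused_blobs_global(pool, repos))  # {'sha256:ddd'}

# Deleting team-a/api does not make sha256:bbb collectable, because
# team-b/web still references it. Only a full scan can tell.
del repos["team-a/api"]
print(sorted(unused_blobs_global(pool, repos)))  # ['sha256:aaa', 'sha256:ddd']
```

A scan like this is also only safe if no manifest is pushed between the "mark" and the "sweep", which is one reason such tools tend to need writes paused while they run.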

With registry v2, the build system builds OCI images and pushes them directly to an R2 bucket, and Cloudflare Workers expose a read‑only Pull API that maps the bucket’s OCI image layout to the OCI Distribution Specification. This dramatically improves performance, maximizes multipart upload efficiency, and avoids many registry‑related issues. We also scope blob deduplication to each repository, so deleting a repository lets us garbage-collect its blobs, which greatly simplifies operations and needs no stop‑the‑world downtime.
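The mapping from pull requests to bucket objects can be sketched roughly as follows. This is an illustration in Python rather than Zeabur's actual Worker code; the per-repository key prefix and the function name are assumptions. The two pull endpoints and the `blobs/<algorithm>/<encoded>` and `index.json` paths come from the OCI Distribution and OCI Image Layout specifications.

```python
import re

# Pull endpoints from the OCI Distribution Specification:
#   GET /v2/<name>/blobs/<digest>
#   GET /v2/<name>/manifests/<reference>   (reference = tag or digest)
PULL_ROUTE = re.compile(r"^/v2/(?P<name>.+)/(?P<kind>blobs|manifests)/(?P<ref>[^/]+)$")
DIGEST = re.compile(r"^(?P<alg>[a-z0-9]+):(?P<hex>[a-f0-9]{32,})$")


def bucket_key(path: str) -> str:
    """Map a pull request path to an object key in a per-repository OCI layout.

    Assumption: each repository is stored under "<name>/" in the bucket.
    """
    route = PULL_ROUTE.match(path)
    if route is None:
        raise ValueError(f"not a pull endpoint: {path}")
    name, kind, ref = route["name"], route["kind"], route["ref"]

    digest = DIGEST.match(ref)
    if digest:
        # In an OCI image layout, blobs and manifests addressed by digest
        # both live under blobs/<algorithm>/<encoded>.
        return f"{name}/blobs/{digest['alg']}/{digest['hex']}"
    if kind == "manifests":
        # A tag has to be resolved through the layout's index.json first.
        return f"{name}/index.json"
    raise ValueError(f"blobs must be addressed by digest: {ref}")


example_digest = "sha256:" + "a" * 64
print(bucket_key(f"/v2/team/app/blobs/{example_digest}"))
print(bucket_key("/v2/team/app/manifests/latest"))  # team/app/index.json
```

Under this assumed layout, everything belonging to a repository shares one key prefix, so removing a repository amounts to deleting that prefix.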

However, as you can see, the push flow changed significantly in registry v2. This has already been implemented in zbpack v2, but zbpack v1’s complex push process and heavy reliance on the buildkit CLI for image building made porting difficult. For roughly the past month, projects still on zbpack v1 continued to build against registry v1, and we only switched them to zbpack v2 manually when users reported zbpack v1 failing to start.

As registry v1 became increasingly overloaded and error‑prone, and related support tickets surged, we had to consider moving the image‑handling part of zbpack v1 to zbpack v2.

Why did the new build system have so many issues?

Sharp‑eyed developers may have noticed that the zbpack (v1) GitHub repo was un‑archived and received many Dockerfile‑related updates. In fact, this was preparation for the compatibility layer that bridges zbpack v1 to zbpack v2.

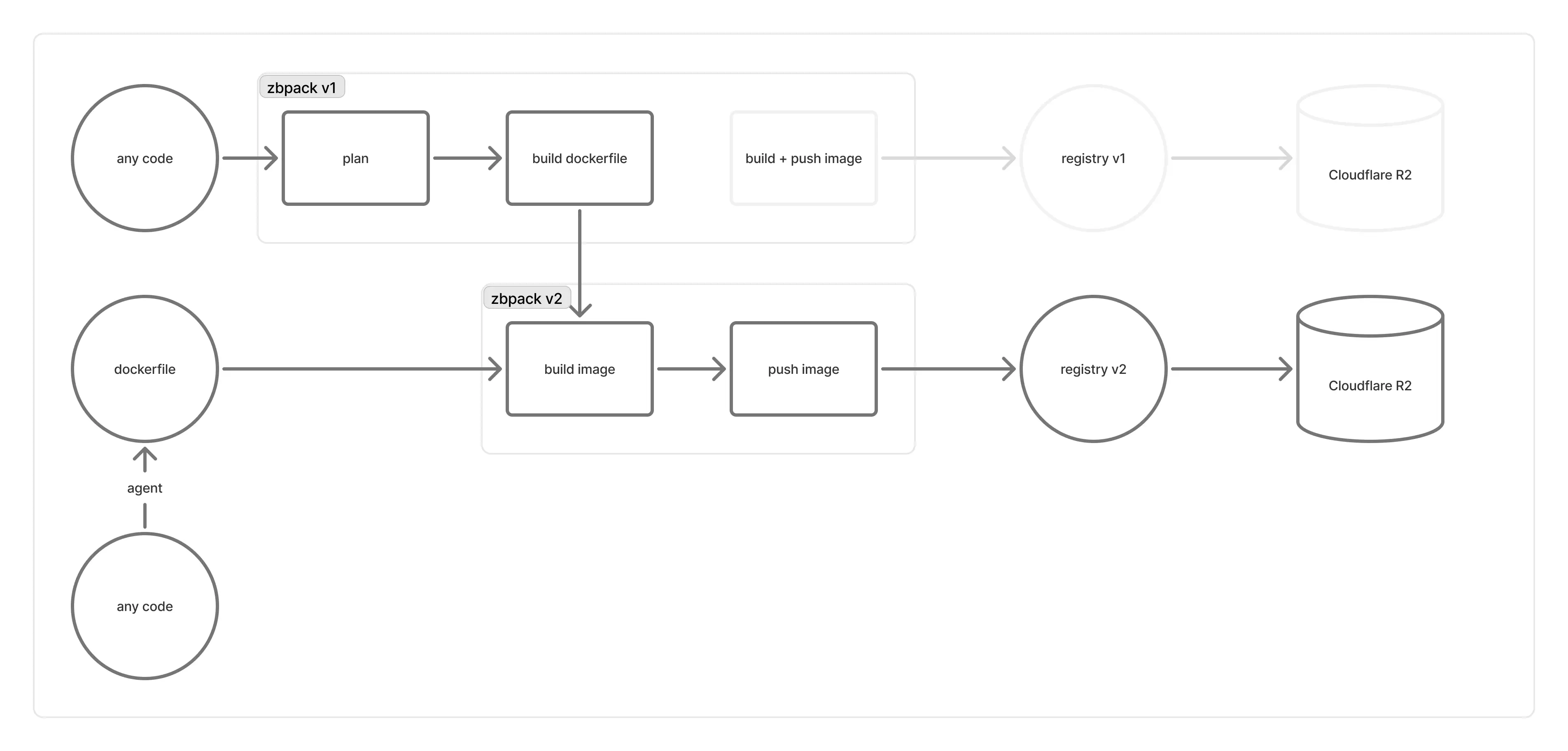

We wanted to keep zbpack v1’s Dockerfile generation capability but switch to zbpack v2 for the actual build after the Dockerfile is produced. Therefore, we exposed v1’s Dockerfile generation as a public function, had the build service call it to generate a Dockerfile, and then passed that to the build machines running zbpack v2.

However, zbpack v1 was originally designed to run on build machines, so many parts needed to be implemented or adapted:

- zbpack v1 depends on many environment variables, but we cannot change the build service’s environment variables.

- zbpack v1 was designed as a one‑shot CLI. Directly wiring it to the build service could trigger internal panic logic.

- The build machines behind zbpack v1 passed many specialized parameters to zbpack v1; we had to re‑implement each of these in the compatibility layer.
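The first two points describe a general pattern for embedding a one-shot CLI in a long-running service. The sketch below shows one way to do it, in Python for brevity. It is not zbpack's code, and `legacy_cli_plan`, `PlanResult`, and `plan_in_service` are hypothetical names: configuration is passed as an explicit mapping instead of being read from the process environment, and a process-terminating exit is converted into an ordinary error value.

```python
from dataclasses import dataclass
from typing import Mapping, Optional


def legacy_cli_plan(env: Mapping[str, str], root: str) -> str:
    """Stand-in for a one-shot CLI entry point (hypothetical).

    Like many CLIs, it terminates the process on bad input.
    """
    if not root:
        raise SystemExit("no project directory given")
    return f"# plan for {root} using {len(env)} variables"


@dataclass
class PlanResult:
    dockerfile: Optional[str]
    error: Optional[str]


def plan_in_service(user_env: Mapping[str, str], root: str) -> PlanResult:
    """Run the CLI logic inside a service.

    The user's variables are handed over as a copy, so the service's own
    os.environ is never modified, and SystemExit is caught so a bad
    project cannot take the whole service down.
    """
    try:
        return PlanResult(legacy_cli_plan(dict(user_env), root), None)
    except SystemExit as exc:
        return PlanResult(None, str(exc))


print(plan_in_service({"ZBPACK_EXAMPLE": "1"}, "/src"))
print(plan_in_service({}, ""))
```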

Therefore, we implemented a zbpack v1 compatibility layer that mirrors how the v1 build machines invoked zbpack v1. We had already fixed most obvious issues (like code detection) before the global rollout, and internal tests on staging machines didn’t show mis‑detections. On‑call engineers also closely monitored the rollout. However, once deployed to all machines, we discovered many new issues that the compatibility layer hadn’t accounted for. For example:

- Environment variables required by zbpack were not correctly passed through, causing variables prefixed with

ZBPACK_to be ignored. - The project “root directory” was not correctly passed to zbpack v1, so it kept inspecting the filesystem from the system root.

- The zbpack v1 version used by the compatibility layer made large changes to Dockerfile logic that our tests did not properly cover, causing

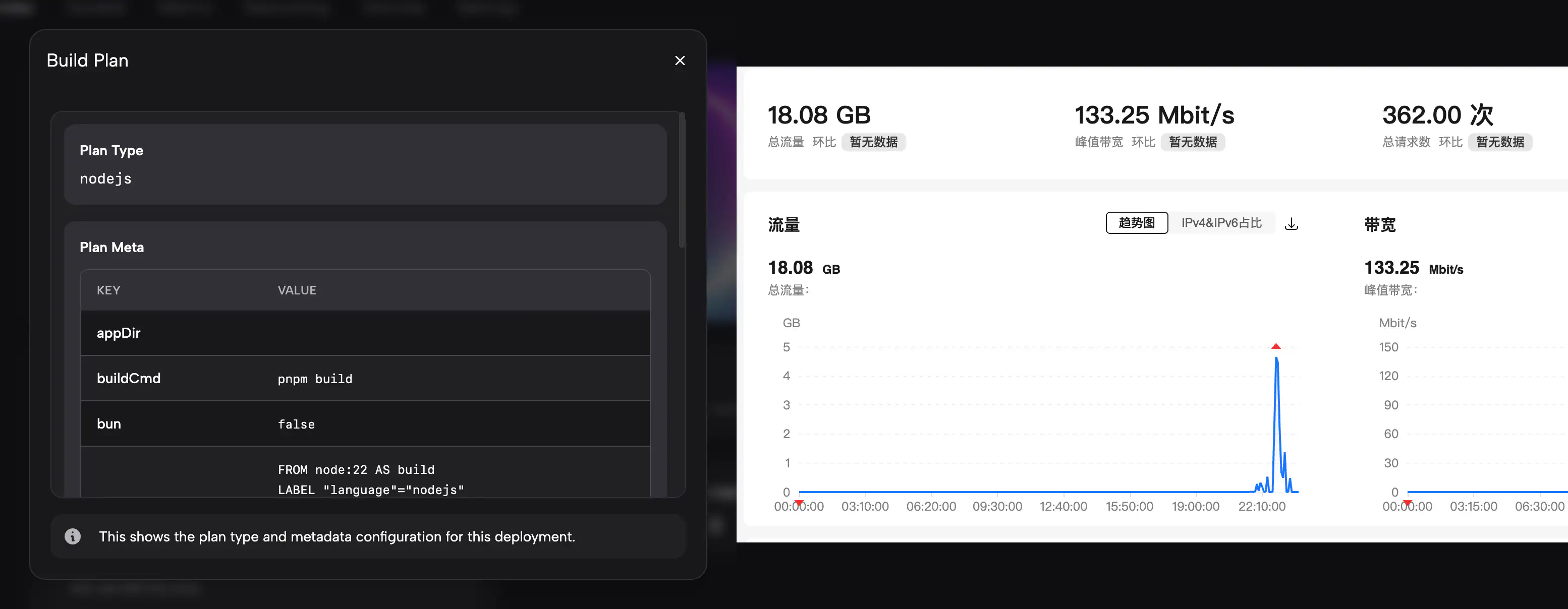

ZBPACK_DOCKERFILE_NAMEbehavior to differ from before. - Many users consider plan type and plan meta important, but the compatibility layer did not display them correctly in build logs as before.

- Registry v2 was extremely slow when accessed from Mainland China.
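The first two items come down to inputs that have to be forwarded explicitly once the planner no longer runs on the build machine. A minimal sketch of both, assuming hypothetical helper names and a repository checked out under a known directory (this is not the compatibility layer's actual code):

```python
from pathlib import PurePosixPath

PREFIX = "ZBPACK_"


def zbpack_options(service_vars: dict[str, str]) -> dict[str, str]:
    """Select the variables that must be forwarded to the build planner."""
    return {k: v for k, v in service_vars.items() if k.startswith(PREFIX)}


def project_root(checkout: str, root_directory: str) -> str:
    """Resolve the configured root directory inside the checkout.

    An empty or "/" setting means the repository root, never the
    filesystem root of the machine running the planner.
    """
    relative = PurePosixPath(root_directory.strip("/") or ".")
    if ".." in relative.parts:
        raise ValueError(f"root directory escapes the checkout: {root_directory}")
    return str(PurePosixPath(checkout) / relative)


variables = {"ZBPACK_DOCKERFILE_NAME": "api", "DATABASE_URL": "postgres://example"}
print(zbpack_options(variables))                   # {'ZBPACK_DOCKERFILE_NAME': 'api'}
print(project_root("/work/repo", "/apps/web/"))    # /work/repo/apps/web
print(project_root("/work/repo", "/"))             # /work/repo
```

Test cases built around inputs like these (a non-empty root directory, a `ZBPACK_`-prefixed variable) are the kind that would exercise the paths listed above.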

Given that some scenarios lacked representative test environments, and rolling back could have had a larger impact, during on‑call we chose to proceed and iterate quickly—fix, test, and roll out—while also providing workarounds to customers. Between 8/26 and 8/27 we quickly completed the zbpack compatibility layer, added plan type and plan meta displays, and set up a Mainland China CDN to accelerate registry v2 downloads.

We sincerely thank all customers who reported these issues; your feedback helped surface edge cases the compatibility layer missed and prompted us to investigate and fix them.

Will this happen again?

The issues described above have been fixed, and the missing features implemented, in the compatibility layer. If you find anything that still does not work as it did before, please open a ticket and our engineers will take care of it.

Known issues at the moment:

- Newly deployed services might have their exposed port reset to the default port 8080. The root cause is still under investigation. As a temporary workaround, explicitly set `PORT=<public port>` (e.g., `PORT=8080`).

- If you deploy with a Dockerfile and it is not read correctly, go to “Settings” > “Dockerfile” and manually paste the Dockerfile content you want to use. This should already be fixed, but we would appreciate a ticket so we can investigate the root cause.
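If your application chooses its listening port from the environment, the `PORT` workaround pairs naturally with a startup check like the one below. This is a generic sketch, not Zeabur-specific code; `listen_port` is a hypothetical helper, and the fallback of 8080 simply mirrors the default mentioned above.

```python
import os


def listen_port(env=None, default: int = 8080) -> int:
    """Return the port from PORT, or the default when PORT is unset or blank."""
    env = os.environ if env is None else env
    raw = env.get("PORT", "").strip()
    return int(raw) if raw else default


print(listen_port({"PORT": "3000"}))  # 3000
print(listen_port({}))                # 8080
```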

Looking back at this incident, the core causes include:

- Too few internal test cases, especially around project roots and Dockerfile names. We mistakenly believed the compatibility layer covered all environments and treated this as a small change suitable for a global rollout.

- No feature flag. A behavior-changing feature such as the “zbpack v1 compatibility layer” should have been gated behind a feature‑flag mechanism similar to the one used for zbpack v2, rather than being rolled out to everyone at once.

- Insufficient awareness of Mainland China’s network conditions; we incorrectly applied overseas assumptions and did not implement a CDN in advance.

- We should have tested with a smaller customer cohort and collected feedback earlier.
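A common way to combine a feature flag with a small initial cohort is deterministic percentage bucketing. The sketch below shows the idea only; it is not Zeabur's flag mechanism, and the function name, feature name, and project IDs are made up for illustration.

```python
import hashlib


def in_rollout(project_id: str, feature: str, percent: int) -> bool:
    """Deterministically place a project in or out of a rollout cohort.

    The same project always lands in the same bucket for a given feature,
    so raising `percent` only ever adds projects to the cohort.
    """
    digest = hashlib.sha256(f"{feature}:{project_id}".encode()).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # bucket in 0..99
    return bucket < percent


projects = [f"project-{i}" for i in range(1000)]
for percent in (1, 10, 50, 100):
    enabled = sum(in_rollout(p, "zbpack-v1-compat", percent) for p in projects)
    print(f"{percent:>3}% -> {enabled} of {len(projects)} projects")
```

Because the bucket depends only on the feature name and project ID, a project that hit a problem at 1% stays in the cohort at 10%, which keeps reports reproducible while the rollout widens.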

If you were affected by this incident, we can provide credits proportional to the duration of impact as compensation. We will be more cautious with future rollouts of these features, and we greatly appreciate everyone who helped uncover issues in the compatibility layer.